Demonstrations

Demonstrations - Batch 1

Wednesday, September 10, 3:00-3:45PM, Magistrale

[DEMO] User Friendly Calibration and Tracking for Optical Stereo See-Through Augmented Reality

Authors: Folker Wientapper, Timo Engelke, Jens Keil, Harald Wuest and Johanna Mensik

An important problem in see-through AR is the calibration of the optical see-through HMD (OST-HMD) to individual users. We propose to divide the calibration into two separate stages. In an offline stage all user-independent parameters are retrieved, in particular the exact location and scaling of the two virtual display planes for each eye with respect to the coordinate frame of the OST-HMD built-in camera. In an online stage the calibration is fine-tuned to user-specific parameters, such as the individual eye-separation distance and height.

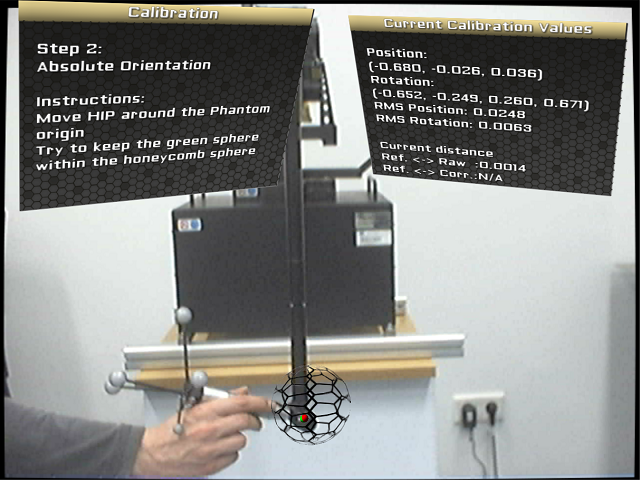

[DEMO] Comprehensive Workspace Calibration for Visuo-Haptic Augmented Reality

Authors: Ulrich Eck, Frieder Pankratz, Christian Sandor, Gudrun Klinker and Hamid Laga

In our science and technology paper “Comprehensive Workspace Calibration for Visuo-Haptic Augmented Reality”, ISMAR 2014, we present an improved workspace calibration that additionally compensates for errors in the gimbal sensors. This demonstration showcases the complete workspace calibration procedure as described in our paper, including a mixed reality demo scenario that allows users to experience the calibrated workspace. Additionally, we demonstrate an early stage of our proposed future work on improved user guidance during the calibration procedure using visual guides.

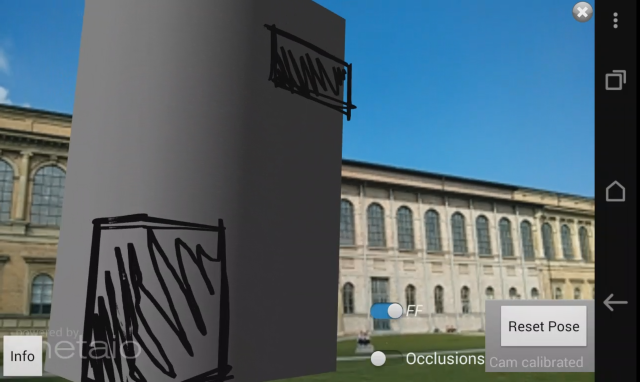

[DEMO] On-Site Augmented Collaborative Architecture Visualization

Authors: David Schattel, Marcus Tönnis, Gudrun Klinker, Gerhard Schubert and Frank Petzold

With this demonstration, we go one step further and provide an interactive visualization at the proposed building site, further enhancing collaboration between different audiences. Mobile phones and tablet devices are used to visualize virtual building structures that are registered without markers and to immediately show changes made to the models in the Collaborative Design laboratory. This way, architects can get a direct impression of how a building will integrate within the environment and residents can get an early impression of future plans.

[DEMO] Mobile Augmented Reality – 3D Object Selection and Reconstruction with an RGBD Sensor and Scene Understanding

Authors: Daniel Wagner, Gerhard Reitmayr, Alessandro Mulloni, Erick Mendez and Serafin Diaz

In this proposal we showcase two 3D reconstruction systems running in real time on a tablet equipped with a depth sensor. We believe that the proposed set of demonstrations will engage ISMAR attendees both in terms of tracking technology and user experience. Both demos show state-of-the-art 3D reconstruction technology and give attendees a chance to try our tracking hands-on with simple and interactive user interfaces.

[DEMO] High volume offline image recognition

Authors: Tomasz Adamek, Luis Martinell, Miquel Ferrarons, Alex Torrents and David Marimon

We show a prototype of an offline image recognition engine, running on a tablet with an Intel® Atom™ processor, that searches through thousands (5000+) of images in less than 250 ms. Moreover, the prototype still offers the advanced capability of recognising real-world 3D objects, until now reserved for cloud solutions. Until now, image search within large collections of images could be performed only in the cloud, requiring mobile devices to have Internet connectivity. However, for many use cases the connectivity requirement is impractical, e.g. many museums have no network coverage, or do not want their visitors to incur expensive roaming charges.

[DEMO] Markerless Augmented Reality Solution for Industrial Manufacturing

Authors: Boris Meden, Sebastian Knoedel and Steve Bourgeois

We present a comprehensive augmented reality solution to efficiently perform different verification procedures during industrial assembly-line manufacturing using CAD data from product lifecycle management (PLM) systems. The demonstration focuses on a variety of industrial use cases that have to go through different control processes, assisted by augmented reality tools. Thus, the experience has to be precise, robust to natural movements and easy to realise for an assembly-line technician.

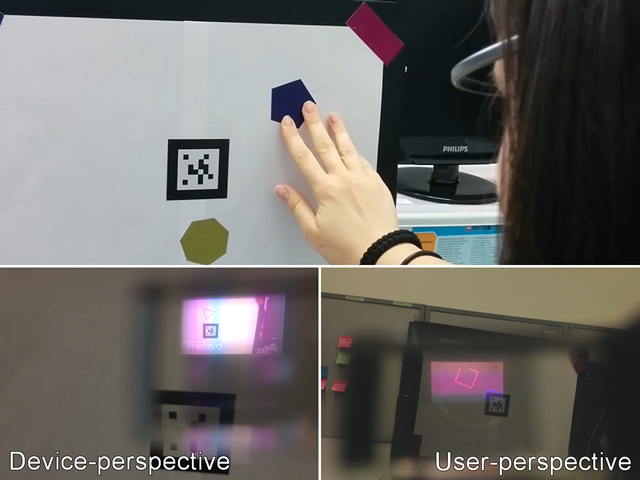

[DEMO] Device vs. User-Perspective Rendering in AR applications for Monocular Optical See-Through Head-Mounted Displays

Authors: João Paulo Lima, Rafael Roberto, João Marcelo Teixeira and Veronica Teichrieb

This demonstration allows visitors to use AR applications for monocular optical see-through head-mounted displays with two forms of visualization. One is the device-perspective approach, in which the user sees the virtual content registered with the camera image at the display. The other is the user-perspective method, in which the display is used as a de facto optical see-through device and the virtual content is registered with the real world.

[DEMO] G-SIAR: Gesture-Speech Interface for Augmented Reality

Authors: Thammathip Piumsomboon, Adrian Clark and Mark Billinghurst

We demonstrate an Augmented Reality (AR) system that combines direct free-hand interaction with an indirect multimodal gesture and speech interface. A three-dimensional (3D) design sandbox application, featuring online object creation, has been developed to illustrate the use case of our system, which supports both interaction techniques.

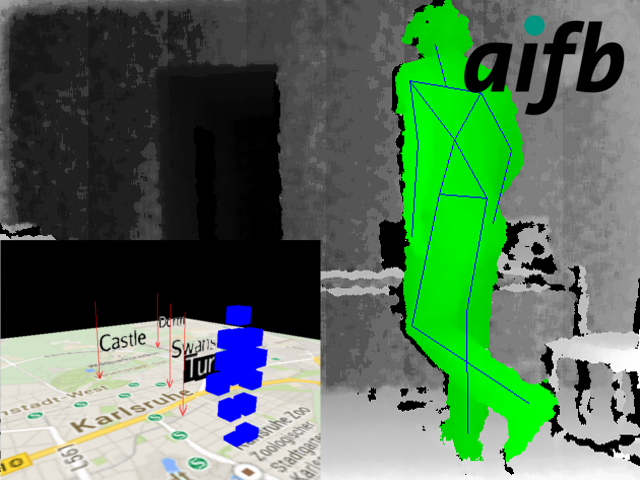

[DEMO] Integrating Highly Dynamic RESTful Linked Data APIs in a Virtual Reality Environment

Authors: Felix Leif Keppmann, Tobias Käfer, Steffen Stadtmüller, René Schubotz and Andreas Harth

We demonstrate a Virtual Reality information system that shows the applicability of REST in highly dynamic environments as well as the advantages of Linked Data for on-the-fly data integration. We integrate a motion detection sensor application to remote control an avatar in the Virtual Reality. In the Virtual Reality, information about the user is integrated and visualised. Moreover, the user can interact with the visualised information.

[DEMO] The Fashion Studio

Authors: Alin Popescu

In this demo we present a revolutionary product in the field of augmented reality that takes shopping a step closer to the future by enabling users to try on clothes in front of a TV. It uses a Microsoft Kinect to capture users’ skeleton and motion and to display clothes in front of them. The clothes are 3D mesh models created by artists that are realistically rendered using bump mapping, color maps of the surrounding environment and ambient occlusion using real-time ray tracing. They are realistically animated using skinning, skinned cloth or physically simulated cloth.

[DEMO] Plucky: Plucking Data At the Flick of a Wrist

Authors: Hafez Rouzati, Amber Standley and John Murray

Plucky is a unique “life-sized” augmented visualization experience. By leveraging the SeeBright smartphone-based head mounted display technology, 3D joystick interactions, and virtual displays, Plucky allows users to explore and visualize trends from search and social media data sources in any number of geographical locations and to build scrapbooks using content “plucked” from any number of augmentations presented around the user.

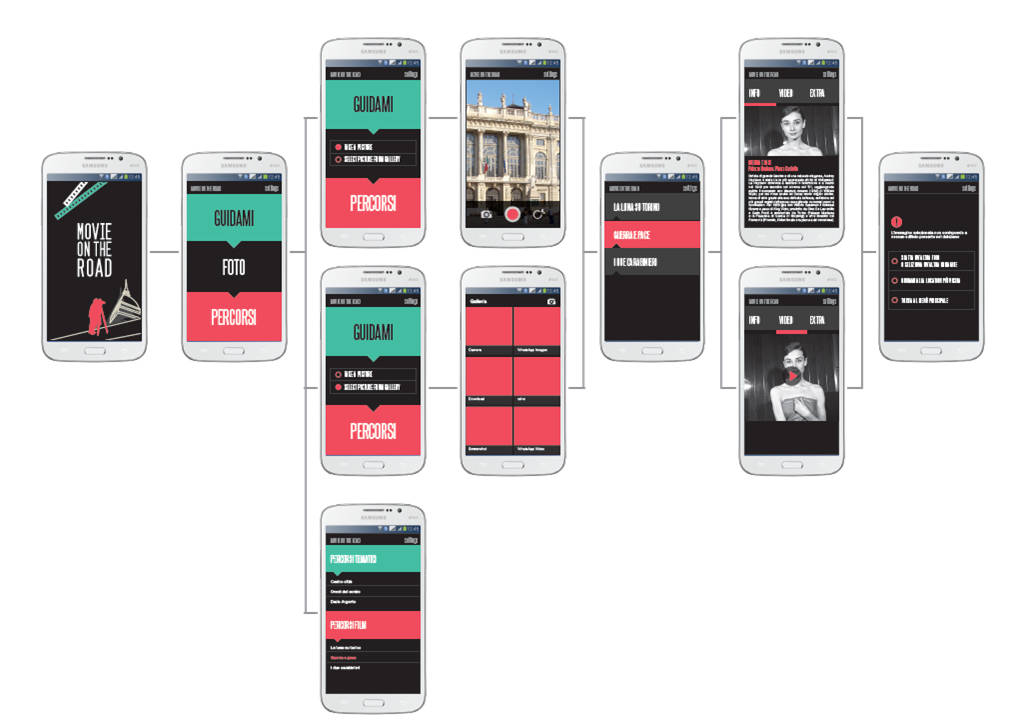

[DEMO] Movie On the Road: a Multimedia-Augmented Tourist Experience with CDVS Descriptors

Authors: Giovanni Ballocca, Attilio Fiandrotti, Marco Gavelli, Massimo Mattelliano, Michele Morello, Alessandra Mosca and Paolo Vergori

We present Movie On The Road, an Android application which allows a tourist to explore urban environments through his smartphone camera. We exploit the recently standardized MPEG CDVS technology to identify the user location and specific landmarks around him with the assistance of a remote server. The server identifies the user location and returns to the user multimedia content related to the location or to specific landmarks in the surroundings.

Demonstrations - Batch 2

Thursday, September 11, 1:00-1:30PM, Magistrale

[DEMO] MRI Design Review System - A Mixed Reality Interactive Design Review System for Architecture, Serious Games and Engineering using Unity3D, a Tablet Computer and Natural Interfaces

Authors: Andreas Behmel, Wolfgang Höhl and Thomas Kienzl

The MRI Design Review System is a solution that makes the creation and presentation of interactive 3D applications as simple as preparing a PowerPoint presentation. Without any programming skills you can easily manipulate 3D models within standard software applications. Control, change or adapt your design easily and interact with 3D models through natural interfaces and standard handheld devices. Navigate in 3D space using only your tablet computer.

[DEMO] Thermal Touch: Thermography-Enabled Everywhere Touch Interfaces for Mobile Augmented Reality Applications

Authors: Daniel Kurz

We present an approach that makes any real object a touch interface for mobile AR applications. Using infrared thermography, we detect residual heat resulting from a warm fingertip touching the colder surface of an object. Once a touch has been detected in the thermal image, we determine the corresponding 3D position on the touched object based on object tracking using a visible light camera. This 3D position then enables user interfaces to naturally interact with real objects and associated digital information.
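
As a rough illustration of the detection step only, the sketch below flags pixels that are warmer than a reference thermal frame by more than a threshold and returns their centroid. The function name `detect_touch`, the 2 °C threshold and the minimum pixel count are assumptions made for this example and are not taken from the demo; the subsequent mapping to a 3D position via object tracking is not shown.

```python
def detect_touch(reference, current, min_delta=2.0, min_pixels=3):
    """Return the (row, col) centroid of pixels warmed by a touch, or None.

    reference, current: 2D lists of temperatures in degrees Celsius.
    min_delta and min_pixels are illustrative values, not from the demo.
    """
    warm = [
        (r, c)
        for r, row in enumerate(current)
        for c, temp in enumerate(row)
        if temp - reference[r][c] >= min_delta
    ]
    if len(warm) < min_pixels:
        return None  # too few warm pixels: treat as sensor noise
    row_mean = sum(r for r, _ in warm) / len(warm)
    col_mean = sum(c for _, c in warm) / len(warm)
    return (row_mean, col_mean)


# Hypothetical 5x5 thermal frames: a 20 °C surface with a 2x2 warm spot.
reference = [[20.0] * 5 for _ in range(5)]
current = [row[:] for row in reference]
for r, c in [(1, 2), (1, 3), (2, 2), (2, 3)]:
    current[r][c] = 24.0

print(detect_touch(reference, current))
```

A real system would likely also need to separate residual heat from the finger itself, which this sketch does not attempt.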

[DEMO] Insight: Webized Mobile AR and Real-life Use Cases

Authors: Sangchul Ahn, Joohyun Lee, Jinwoo Kim, Sungkuk Chun, Jungbin Kim, Iltae Kim, Junsik Shim, Byounghyun Yoo and Heedong Ko

This demonstration shows a novel approach for Webizing mobile augmented reality, which uses HTML as its content structure, and its real-life use cases. With this approach, mobile AR applications can be seamlessly developed as common HTML documents under the current Web eco-system. The advantages of the webized mobile AR and its high productivity are demonstrated with real-life use cases in various domains, such as shopping, entertainment, education, and manufacturing.

[DEMO] Towards Augmented Reality User Interfaces in 3D Media Production

Authors: Max Krichenbauer, Goshiro Yamamoto, Takafumi Taketomi, Christian Sandor and Hirokazu Kato

For this demo, we present an Augmented Reality (AR) User Interface (UI) for the 3D design software Autodesk Maya, aimed at professional media creation. A user wears a head-mounted display (HMD) and thin cotton gloves which allow him to interact with virtual 3D models in the work area. Additional viewers can see the video stream on a projector and thus share the user's view.

[DEMO] Dense Planar SLAM

Authors: Renato Salas-Moreno, Ben Glocker, Paul Kelly and Andrew Davison

Using higher-level entities during mapping has the potential to improve camera localisation performance and give substantial perception capabilities to real-time 3D SLAM systems. We present an efficient new real-time approach which densely maps an environment using bounded planes and surfels. Our method offers the every-pixel descriptive power of the latest dense SLAM approaches, but takes advantage directly of the planarity of many parts of real-world scenes via a data-driven process to directly regularize planar regions and represent their accurate extent efficiently using an occupancy approach with on-line compression.

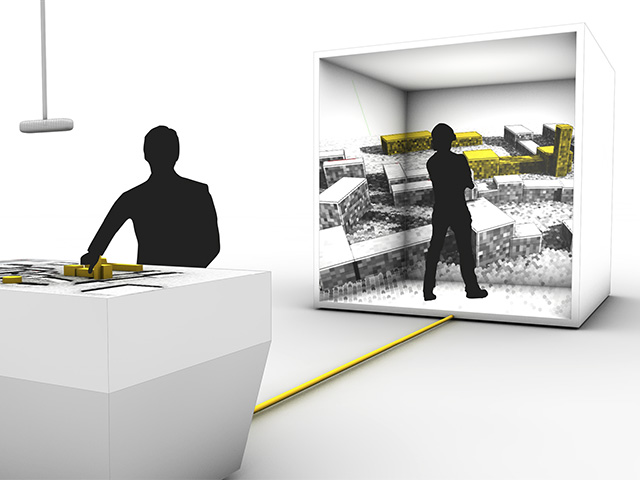

[DEMO] The Collaborative Design Platform Protocol – A Protocol for a Mixed Reality Installation for Improved Incorporation of Laypeople in Architecture

Authors: Tibor Goldschwendt, Christoph Anthes, Gerhard Schubert, Dieter Kranzlmüller and Frank Petzold

Our project shows how linking established design tools (models, hand sketches) and digital VR representation has given rise to a new interactive presentation platform that bridges the gap between analogue design methods and digital architectural presentation. The prototypical setup creates a direct connection between physical volumetric models and a 5-sided CAVE and offers an entirely new means of VR presentation and direct interaction with the architectural content using tangible devices.

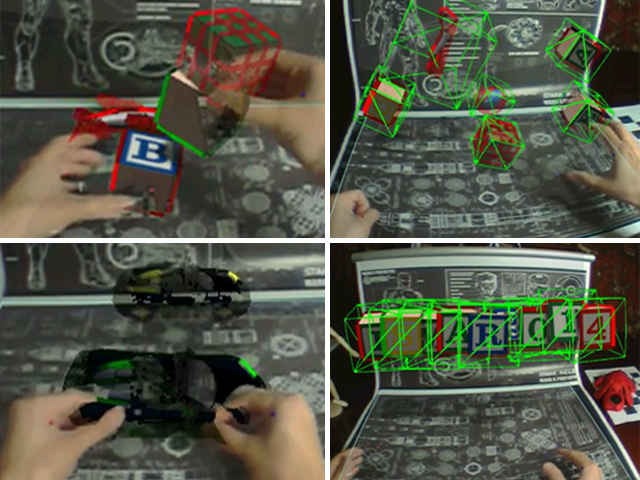

[DEMO] Mobile Augmented Reality – Tracking, Mapping and Rendering

Authors: Daniel Wagner, Gerhard Reitmayr, Alessandro Mulloni, Erick Mendez and Serafin Diaz

In this proposal we suggest a set of demonstrations to be presented at ISMAR 2014 around the topic of monocular tracking, mapping and rendering on mobile phones. We believe that the proposed set of demonstrations will engage ISMAR attendees both in terms of tracking technology and user experience. On the one hand, all demos show state-of-the-art tracking and mapping technology and give attendees a chance to try our tracking solutions hands-on and to discuss them with our researchers and developers.

[DEMO] A complete interior design solution with diminished reality

Authors: Sanni Siltanen, Henrikki Saraspää and Jari Karvonen

We demonstrate an interior design solution with advanced features, most importantly a diminished reality feature. The diminished reality functionality takes 3D indoor structures into account and adapts to the lighting of the environment. We present two demonstrations, on a tablet and on a laptop. The iPad version uses the touch screen to select the objects to be removed from the image. It allows users to build modular furniture and it casts shadows of the virtual furniture for a realistic visualization. On the laptop PC we demonstrate real-time diminished reality for indoor AR with several options. Our demonstrations are optimized for interior design and indoor AR.

[DEMO] Exploring multimodal interaction techniques for a mixed reality digital surface

Authors: Martin Fischbach, Chris Zimmerer, Anke Giebler-Schubert and Marc Erich Latoschik

Quest – XRoads is a multimodal and multimedia mixed reality version of the traditional role-play tabletop game Quest: Zeit der Helden. The original game concept is augmented with virtual content to permit a novel gaming experience. The demonstration consists of a turn-based skirmish, where players have to collaborate, control heroes or villains and use their abilities via speech, gesture, touch as well as tangible interactions.

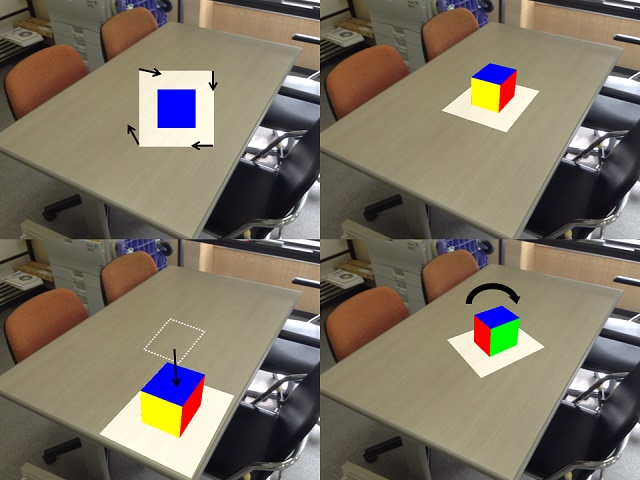

[DEMO] Tablet system for visual overlay of 3D virtual object onto real environment

Authors: Hiroyuki Yoshida, Takuya Okamoto and Hideo Saito

We propose a novel system for the visual overlay of 3D virtual objects onto a real environment observed by a tablet PC with a camera. This system allows us to visually simulate the layout of virtual 3D objects in the real environment captured by the tablet PC. To estimate the pose and position of the tablet PC, we propose and implement procedures that use the captured image and the motion sensor.

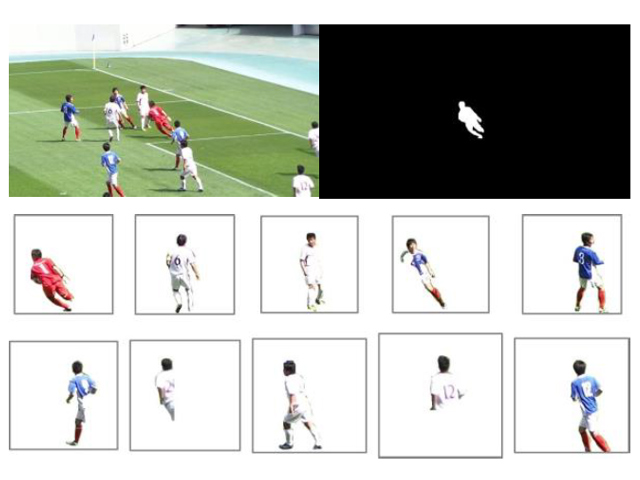

[DEMO] Displaying Free-viewpoint Video with User Controlable Head Mounted Display DEMO

Authors: Yuko Yoshida and Tetsuya Kawamoto

We propose a method to experience free-viewpoint video and images with a head mounted display (HMD) and a game controller that enables intuitive operation. The free-viewpoint video is generated from multiple 4K resolution cameras at sports games such as soccer and American football. This method can provide a player’s perspective. We adopt a billboard method to create the free-viewpoint video, which consists of multiple textures selected according to the user-specified viewpoint.

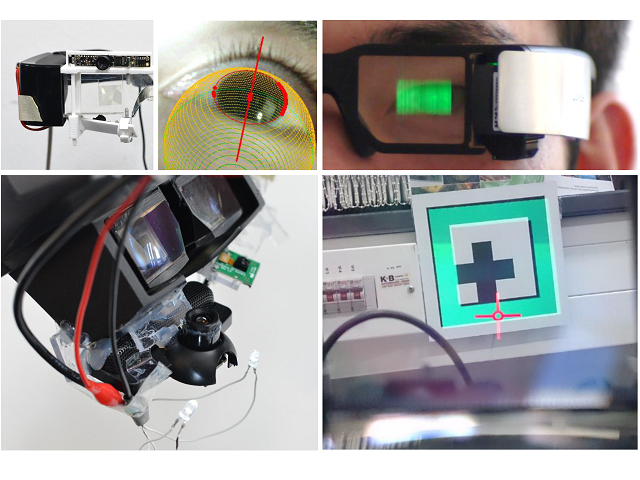

[DEMO] Interaction-Free Calibration for Optical See-through Head-mounted Displays based on 3D Eye Localization

Authors: Yuta Itoh and Gudrun Klinker

This demonstration showcases INDICA, an automatic OST-HMD calibration approach presented in our previous work and our ISMAR 2014 paper. The method calibrates the display to the user’s current eyeball position by combining online eye-position tracking with offline parameters. Visitors to our demonstration can try both our manual calibration and our interaction-free calibration on a customized OST-HMD.

[DEMO] On the Use of Augmented Reality Techniques in a Telerehabilitation Environment for Wheelchair Users’ Training

Authors: Daniel Caetano, Fernando Mattioli, Edgard Lamounier and Alexandre Cardoso

The purpose of this work is to investigate the use of Augmented Reality techniques in telerehabilitation, applied to wheelchair users' training. In this scenario, using a computer with unconventional devices, the user is connected to a remote training space and can issue commands in order to carry out training exercises. The telerehabilitation environment should reproduce the main challenges faced by wheelchair users in their daily activities.

[DEMO] Tracking Texture-less, Shiny Objects with Descriptor Fields

Authors: Alberto Crivellaro, Yannick Verdie, Kwang Yi, Pascal Fua and Vincent Lepetit

Our demo demonstrates the method we published at CVPR this year [Crivellaro CVPR'14] for tracking specular and poorly textured objects. Instead of detecting and matching local features, we retrieve the pose in the input images by aligning them with a reference image exploiting dense optimization techniques. Our main contribution is an efficient novel local descriptor that can be used in place of the intensities to make the alignment much more robust. Our demo will let visitors experiment with it and with their own patterns.

Demonstrations - Batch 3

Friday, September 12, 10:30-11:00AM, Magistrale

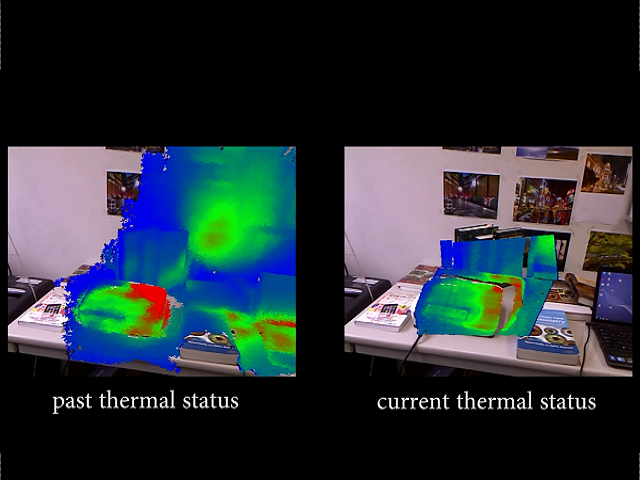

[DEMO] RGB-D-T Camera System for AR Display of Temperature Change

Authors: Kazuki Matsumoto, Wataru Nakagawa, Francois Sorbier, Maki Sugimoto, Hideo Saito, Shuji Senda, Takashi Shibata and Akihiko Iketani

Anomalies in power equipment can be found from temperature changes compared to its normal state. In this paper we present a system for visualizing temperature changes between the thermal 3D model and the current temperature from any viewpoint. Our approach is based on a precomputed 3D RGB model and 3D thermal model of the target scene, acquired with an RGB-D camera coupled with a thermal camera.

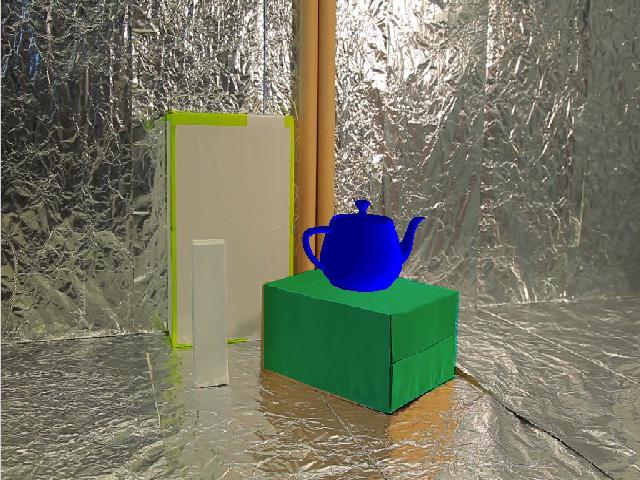

[DEMO] Real-Time Illumination Estimation from Faces for Coherent Rendering

Authors: Sebastian B. Knorr and Daniel Kurz

We showcase a method for estimating the real-world lighting conditions within a scene based on the visual appearance of the user's face captured in a single image of a monocular user-facing camera. The light reflected from the face towards the camera is measured and the most plausible real-world lighting condition explaining the measurement is estimated in real-time based on knowledge acquired in an offline step. The estimated illumination is then used for rendering virtual objects.

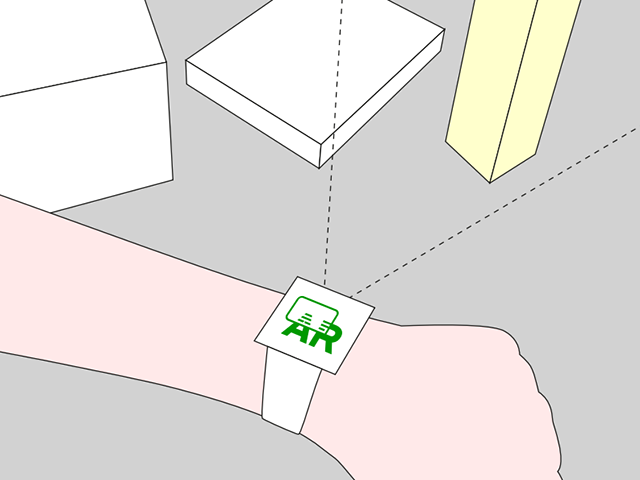

[DEMO] Smartwatch-Aided Handheld Augmented Reality

Authors: Darko Stanimirovic and Daniel Kurz

We demonstrate a novel method for human interaction with real objects in their surroundings, combining Visual Search and Augmented Reality (AR). This method is based on a smartwatch tethered to a smartphone, and it is designed to provide a more user-friendly experience than approaches based only on a handheld device, such as a smartphone or a tablet computer.

[DEMO] Placing Information near to the Gaze of the User

Authors: Marcus Tönnis and Gudrun Klinker

Gaze tracking facilities have so far mainly been used, in general, for marketing or for the disabled and, more specifically in Augmented Reality, for interaction with control triggers such as buttons. We go one step further and use the line of sight of the user to attach information. Since the information must not conceal the view of the user, we displace the information by an angular offset and provide means for the user to capture the information by looking at it.
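
The idea of an angular displacement can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the 10° horizontal offset, the 3° capture cone and the coordinate convention (x right, y up, z forward) are all hypothetical, and the label is assumed to hold its position while the user glances at it.

```python
import math


def label_direction(gaze_yaw_deg, gaze_pitch_deg, offset_deg=10.0):
    """Unit vector of the gaze ray after a horizontal angular offset."""
    yaw = math.radians(gaze_yaw_deg + offset_deg)
    pitch = math.radians(gaze_pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),  # x: right
        math.sin(pitch),                  # y: up
        math.cos(pitch) * math.cos(yaw),  # z: forward
    )


def is_captured(gaze_dir, label_dir, capture_deg=3.0):
    """True when the gaze ray lies within capture_deg of the label."""
    dot = sum(g * l for g, l in zip(gaze_dir, label_dir))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    return math.degrees(math.acos(dot)) <= capture_deg


# Looking straight ahead: the label sits 10 degrees to the side.
label = label_direction(0.0, 0.0)
straight_ahead = label_direction(0.0, 0.0, offset_deg=0.0)
print(is_captured(straight_ahead, label))

# Glancing 10 degrees to the side brings the gaze onto the label.
glance = label_direction(10.0, 0.0, offset_deg=0.0)
print(is_captured(glance, label))
```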

[DEMO] A Mobile Augmented Reality System for Portion Estimation

Authors: Thomas Stütz, Radomir Dinic, Michael Domhardt and Simon Ginzinger

Accurate assessment of nutrition information is an important part in the prevention and treatment of a multitude of diseases, but remains a challenging task. We present a novel mobile augmented reality application, which assists users in the nutrition assessment of their meals. The user sketches the 3D form of the food and selects the food type. The corresponding nutrition information is automatically computed.

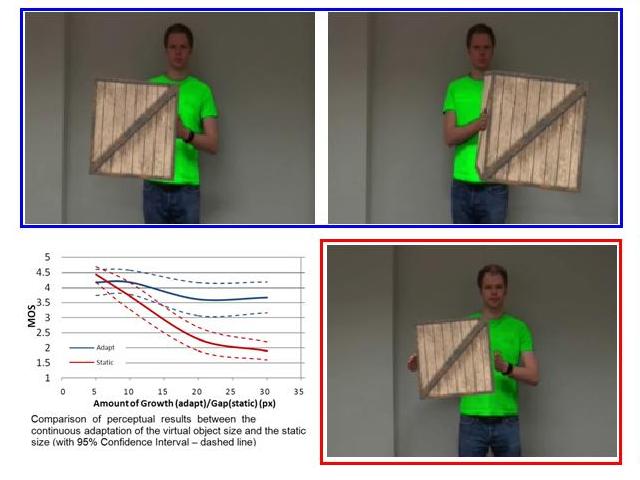

[DEMO] Measurement of Perceptual Tolerance for Inconsistencies within Mixed Reality Scenes

Authors: Gregory Hough, Ian Williams and Cham Athwal

This demonstration is a live example of the experiment presented in [1], namely a method of assessing the visual credibility of a scene where a real person interacts with a virtual object in real time. Inconsistencies created by the actor's incorrect estimation of the virtual object are measured through a series of videos, each containing a defined visual error and rated for interaction credibility on a scale of 1-5 by conference delegates.

[DEMO] Adventurous Dreaming Highflying Dragon: A Full Body Game for Children with Attention Deficit Hyperactivity Disorder (ADHD)

Authors: Yasaman Hashemian, Marientina Gotsis and David Baron

Adventurous Dreaming Highflying Dragon is a full-body-driven game prototype for children ages 6-8 with a diagnosis of Attention Deficit Hyperactivity Disorder (ADHD). The current prototype incorporates research evidence showing that physical activity can help improve ADHD-related symptoms. Physical activity is integrated with cognitively challenging tasks that may improve brain activity by encouraging goal planning and dedication. The current prototype includes three mini-games, each of which teaches skills with real-life use potential.

[DEMO] “It’s a Pirate’s Life” AR game

Authors: David Molyneaux and Selim Benhimane

It’s a Pirate’s Life is an Augmented Reality (AR) game that uses tablet-based real-time 3D reconstruction and tracking to build a dynamic game world. Players captain a pirate ship searching for gold on a virtual sea overlaid on the real world. Real-world objects become part of the play space: islands in the tropical seas to navigate your ships around, while avoiding cannon balls and sea monsters, to find the treasure.

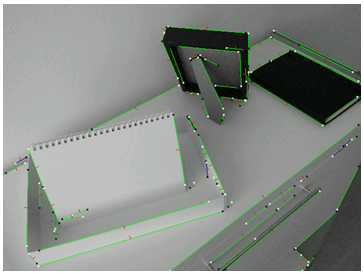

[DEMO] Fast Vision-based Multiplanar Scene Modeling in Unprepared Environments

Authors: Javier Vigueras

We present an AR approach that is suitable for many man-made environments and quickly gives a rough 3D model of piecewise planar scenes from two adequate images, without any a priori knowledge about the scene or camera motion. The method differs from classical structure-from-motion approaches: it deals with a few consistent coplanar sets of features instead of storing a map of hundreds of features.

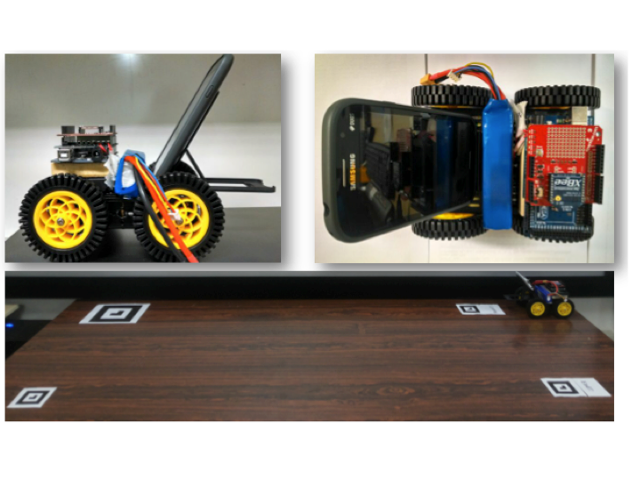

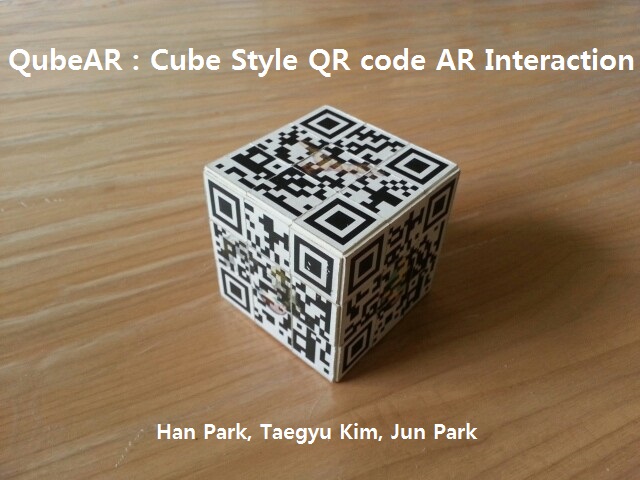

[DEMO] QubeAR : Cube Style QR code AR Interaction

Authors: Han Park, Taegyu Kim and Jun Park

QR codes, owing to their recognition robustness and data capacity, have often been used for AR applications. However, it is difficult to enable tangible interactions with them, because QR codes are generated automatically according to fixed rules and are not easily modifiable. Our goal was to enable QR code based Augmented Reality interactions. In this demo, we introduce a prototype for QR code based Augmented Reality interactions, which allows for Rubik’s cube style rolling interactions.

[DEMO] Towards User Perspective Augmented Reality for Public Displays

Authors: Jens Grubert, Hartmut Seichter and Dieter Schmalstieg

We demonstrate ad-hoc augmentation of public displays on handheld devices, supporting user perspective rendering of display content. Our prototype system only requires access to a screencast of the public display, which can be easily provided through common streaming platforms and is otherwise self-contained. Hence, it easily scales to multiple users.

[DEMO] Sticky Projections - A new approach to Interactive Shader Lamp Tracking

Authors: Christoph Resch

Shader lamps can augment physical objects with projected virtual replications using a camera-projector system. In this demo, we present a new method for tracking arbitrarily shaped physical objects interactively. In contrast to previous approaches, our system is mobile and uses solely the projection of the virtual replication to track the physical object and "stick" the projection to it at interactive rates.

[DEMO] An X-Ray visualization metaphor for automotive Surround View

Authors: Juri Platonov, Thomas Gebauer and Pawel Kaczmarczyk

We present an HMD-based system for improvement of the situational awareness in a vehicle. The system utilizes four wide-angle video cameras in order to create the impression of a (semi) transparent vehicle allowing the perception of the usually hidden parts of the environment. This turns out to be a very intuitive visualization metaphor. The use cases in the automotive area include parking and rough terrain situations.

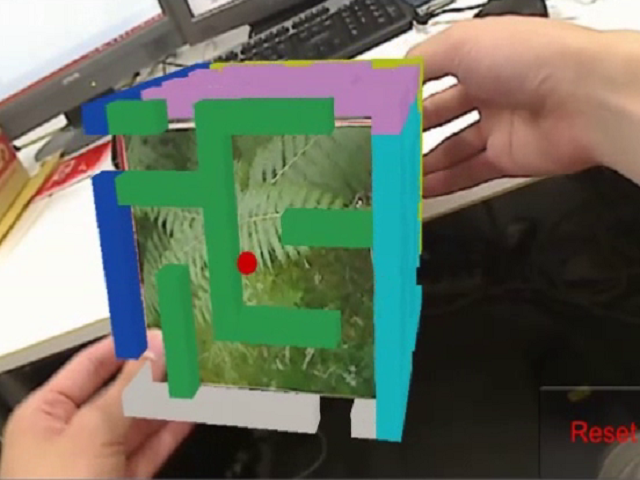

[DEMO] AR Box Maze

Authors: Shogo Miyata, Naoto Ienaga, Jaejun Lee, Taichi Sono, Shuma Hagiwara, Maki Sugimoto and Hideo Saito

Our “AR Box Maze” is an augmented reality maze game. The ball and the stage are superimposed on the surface of a box. The ball is controlled solely by tilting the box, without a mouse or keyboard, so the game can be played intuitively.
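
Tilt-based ball control of this kind can be sketched as a simple physics step. The example below is an illustrative simplification and not the authors' implementation: it treats the ball as a frictionless point mass, assumes the two tilt angles of the box are already known from tracking, and the name `step_ball` is hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def step_ball(pos, vel, tilt_x_deg, tilt_y_deg, dt):
    """Advance the ball by one time step on a box tilted about two axes.

    pos and vel are (x, y) tuples in the plane of the box surface.
    Walls and friction are ignored in this sketch.
    """
    ax = G * math.sin(math.radians(tilt_x_deg))
    ay = G * math.sin(math.radians(tilt_y_deg))
    # Semi-implicit Euler: update velocity first, then position.
    vel = (vel[0] + ax * dt, vel[1] + ay * dt)
    pos = (pos[0] + vel[0] * dt, pos[1] + vel[1] * dt)
    return pos, vel


# Hold the box tilted by 5 degrees along x for one second at 60 steps/s.
pos, vel = (0.0, 0.0), (0.0, 0.0)
for _ in range(60):
    pos, vel = step_ball(pos, vel, 5.0, 0.0, 1.0 / 60.0)
print(pos, vel)
```

A full game would add collision handling against the maze walls after each step.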